This article shows how to fine-tune a top-performing LLM efficiently and cost-effectively on a custom dataset, using the Falcon-7B model with LoRA adapters and the Lit-GPT library.

Ever wondered what it would be like to have a digital twin? A virtual replica of yourself that can have conversations, learn, and even reflect your thoughts? Recent advances in artificial intelligence (AI) have made this once-futuristic idea attainable.

The AI community's efforts have led to the development of many high-quality open-source LLMs, including OpenLLaMA, Falcon, StableLM, and Pythia. You can fine-tune these models on a custom instruction dataset to adapt them to your specific task, such as training a chatbot to answer financial questions. Fine-tuning your own model can also be a data privacy advantage when data cannot be uploaded to or shared with cloud APIs.

In my case, I wanted the model to learn to speak in my style by imitating me, including my jokes and filler words.

Data collection and preparation

Before we dive into the details, I'd like to point out that fine-tuning GPT-like models can be quite tricky. Nevertheless, I decided to take it a step further and train the model in Russian:

• This presents an additional challenge, since models are trained primarily on English text.

• Because Russian is my native language, I have a vast dataset of my personal correspondence in it.

Data collection

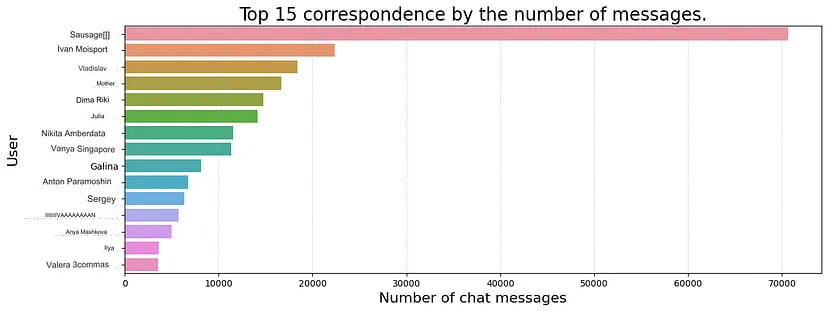

I chose Telegram because it provides a convenient API for data collection. Additionally, it serves as the primary platform for most of my communications with friends. This choice provides a valuable dataset that allows the model to gain a deeper understanding of my unique communication style and enables it to mimic me more effectively.

Following the documentation, I wrote a small script that downloads all correspondence from private chats and saves it to a file:

- Initiate the Telegram client:

```python
from telethon.sync import TelegramClient

# PHONE_NUMBER, TELEGRAM_APP_ID and TELEGRAM_APP_HASH are your Telegram API credentials
client = TelegramClient(PHONE_NUMBER, TELEGRAM_APP_ID, TELEGRAM_APP_HASH)
client.start()
```

- Get a list of dialogs, filtering out groups and channels:

```python
import logging

from telethon.tl.custom.dialog import Dialog

logger = logging.getLogger(__name__)


def get_dialogs(limit: int | None = 100) -> list[Dialog]:
    """Get all dialogs from Telegram."""
    dialogs: list[Dialog] = client.get_dialogs(limit=limit)
    dialogs = [dialog for dialog in dialogs if dialog.is_user]  # remove groups or channels
    logger.info(f"Found {len(dialogs)} dialogs")
    return dialogs
```

- Download the correspondence history:

```python
from telethon.tl.custom.message import Message
from telethon.tl.functions.messages import GetHistoryRequest


def parse_messages(dialog: Dialog, limit: int = 1000) -> list[dict]:
    """Get all messages from the dialog."""
    all_messages_list = []
    offset_id = 0
    while True:
        messages: list[Message] = client(
            GetHistoryRequest(
                peer=dialog,
                offset_id=offset_id,
                offset_date=None,
                add_offset=0,
                limit=limit,
                max_id=0,
                min_id=0,
                hash=0,
            )
        ).messages
        if not messages:
            break
        all_messages_list.extend(
            {
                "date": message.date.isoformat(),
                "message": message.message,
                "out": message.out,
            }
            for message in messages
            # Skip messages without text (e.g. audio or video) and bot messages
            if message.message and not message.is_bot
        )
        # Continue paging from the oldest message fetched so far
        offset_id = messages[-1].id
    return all_messages_list
```

You can find the full script here.

It's worth mentioning that I intentionally excluded audio and video messages from the dataset and focused solely on text. As a result, some information from the dialogues may have been lost. Extracting text from such data is a broad topic that deserves a separate article.

Data preparation

At this point, you must carefully convert the data into instructions for fine-tuning the LLM.

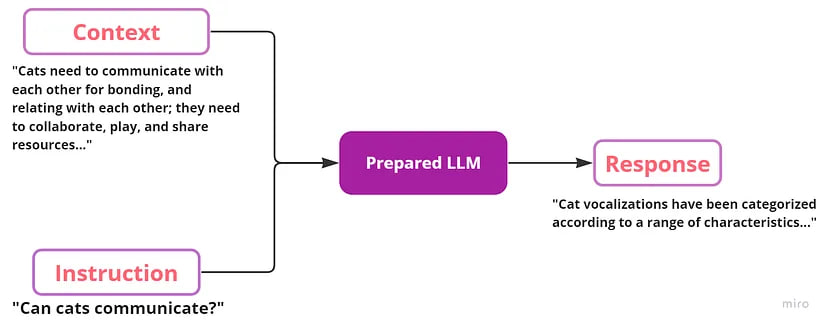

Fine-tuning typically involves training a pretrained model to follow instructions or perform another specific target task (for example, sentiment classification). ChatGPT (which started as a fine-tuned version of a GPT-3.5 series model) is a typical example of a model fine-tuned to follow instructions. Instruction datasets usually have three keys: the instruction, the input (optional context for the given instruction), and the expected response from the LLM. Below is an example of instruction data, where the input key is named context:

```json
[
  {
    "instruction": "Can cats communicate?",
    "context": "Cats need to communicate with each other for bonding, and relating with each other; they need to collaborate, play, and share resources...",
    "response": "Cat vocalizations have been categorized according to a range of characteristics..."
  }
]
```

Schematically, the fine-tuning process can be represented as follows:

It’s important to remember that you can modify the data format to suit your needs. For example, you can input a function and ask the model to generate documentation as a response. However, based on my experience, smaller models (such as 7B) may struggle with complex prompts.

To overcome this, try simplifying the prompts or breaking them down into a series of sequential instructions. This way, you can achieve better results and improve the model’s performance.

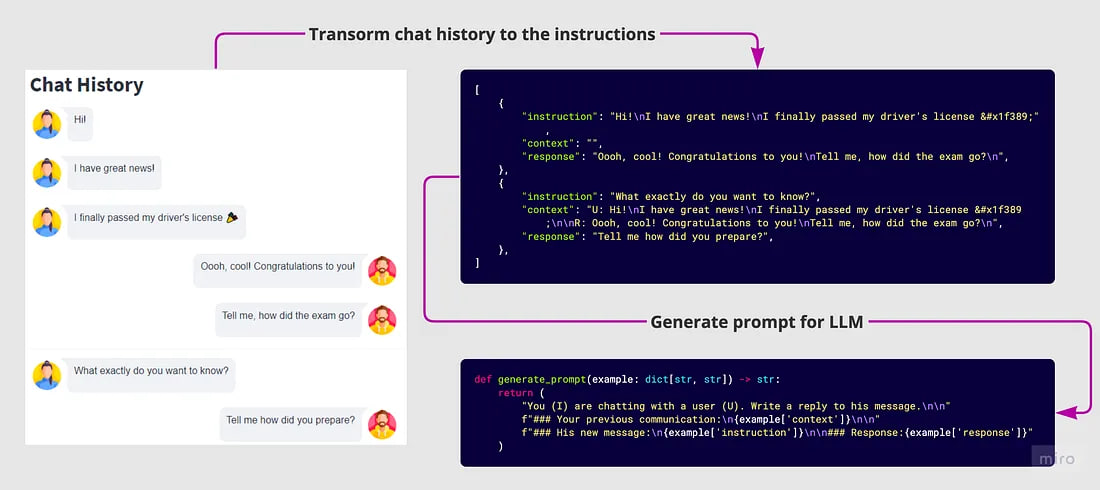

To construct instructions from my chats, I applied several processing steps:

- Splitting the conversation into sessions whenever the time gap between two messages exceeded one day. Such a gap is treated as the start of a new topic, so no context from the previous conversation is carried over.

- Concatenating consecutive messages from the same user into a single message, since some people tend to write several short messages in a row.

- Setting a maximum context length to speed up the training process.

- Adding labels to my messages and to the interlocutor's messages to help the model better understand the context.
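The steps above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not my actual script: the message dictionaries follow the date/message/out format produced by parse_messages, while the helper names, the hypothetical "[Me]"/"[Friend]" labels, and the character-based MAX_CONTEXT_CHARS limit are placeholders chosen for illustration.

```python
from datetime import datetime, timedelta
from itertools import groupby

SESSION_GAP = timedelta(days=1)   # a longer pause starts a new conversation
MAX_CONTEXT_CHARS = 1000          # hypothetical limit on context length
LABELS = {True: "[Me]", False: "[Friend]"}  # hypothetical speaker labels


def split_sessions(messages: list[dict]) -> list[list[dict]]:
    """Split chronologically sorted messages wherever the gap exceeds one day."""
    sessions: list[list[dict]] = []
    previous = None
    for msg in messages:
        current = datetime.fromisoformat(msg["date"])
        if previous is None or current - previous > SESSION_GAP:
            sessions.append([])  # new conversation, no carried-over context
        sessions[-1].append(msg)
        previous = current
    return sessions


def merge_consecutive(session: list[dict]) -> list[dict]:
    """Join consecutive messages from the same sender into a single turn."""
    return [
        {"out": out, "message": "\n".join(m["message"] for m in group)}
        for out, group in groupby(session, key=lambda m: m["out"])
    ]


def build_instructions(messages: list[dict]) -> list[dict]:
    """Turn a chat history into instruction/context/response records."""
    messages = sorted(messages, key=lambda m: datetime.fromisoformat(m["date"]))
    records = []
    for session in split_sessions(messages):
        turns = merge_consecutive(session)
        for i in range(1, len(turns)):
            if not turns[i]["out"]:
                continue  # only my own replies become training targets
            # After merging, turns alternate, so turns[i - 1] is the other person's message
            history = "\n".join(
                f"{LABELS[t['out']]} {t['message']}" for t in turns[: i - 1]
            )
            records.append(
                {
                    "instruction": turns[i - 1]["message"],
                    "context": history[-MAX_CONTEXT_CHARS:],  # keep the most recent part
                    "response": turns[i]["message"],
                }
            )
    return records
```

Working at the level of merged turns keeps the pairing simple: once same-sender messages are joined, every one of my turns is preceded by exactly one message from the other person.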

I also cleared the chat history of sensitive information such as personal passwords or emails.
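As a rough illustration of this kind of cleanup, here is a minimal regex-based redaction step. The patterns and placeholder tokens are assumptions: regexes like these only catch obvious cases such as e-mail addresses and phone-like numbers, and passwords in particular cannot be detected reliably this way, so a manual review is still needed.

```python
import re

# Illustrative patterns only: they catch obvious e-mail addresses and phone numbers
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE_RE = re.compile(r"\+?\d[\d\s()-]{8,}\d")


def redact(text: str) -> str:
    """Replace e-mail addresses and phone-like numbers with placeholder tokens."""
    text = EMAIL_RE.sub("<EMAIL>", text)
    return PHONE_RE.sub("<PHONE>", text)


print(redact("write to ivan.petrov@example.com or call +7 912 345-67-89"))
# write to <EMAIL> or call <PHONE>
```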

I ended up with 51K instructions, which is comparable in size to the Alpaca dataset (~52K instructions) and larger than the Dolly 2.0 instruction dataset by Databricks (~15K instructions).

Model

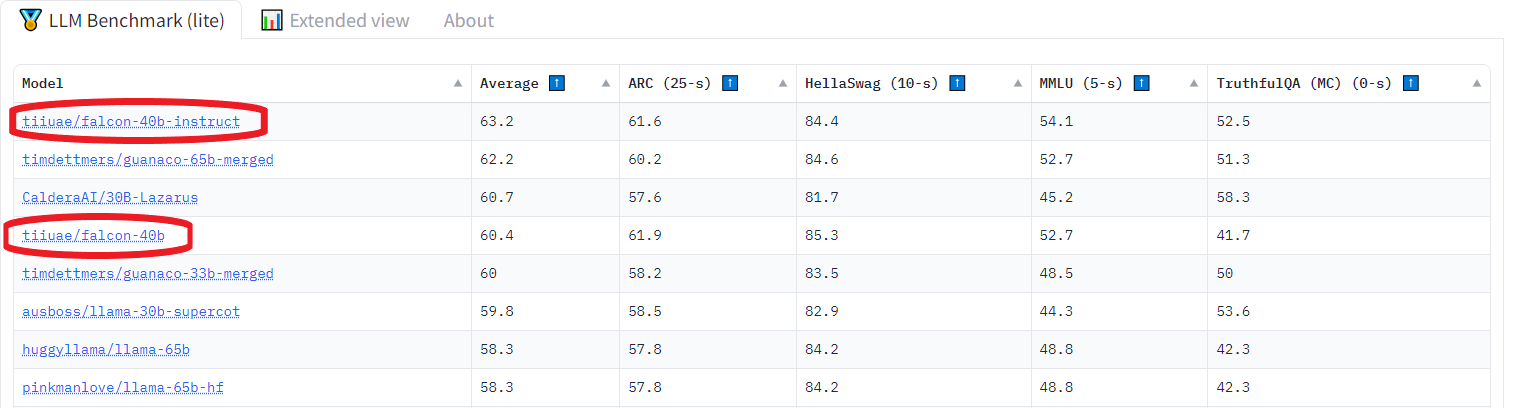

I chose Falcon, at the time of writing the latest open-source large language model released by the Technology Innovation Institute (TII). It is an autoregressive decoder-only model with two variants: a 7-billion-parameter model and a 40-billion-parameter model. The 40B variant was trained on 384 GPUs on AWS for two months.

Based on what is known about the model, Falcon's architecture is very similar to GPT-3 and LLaMA, except that it uses multi-query attention (Shazeer, 2019). It was also trained on the RefinedWeb corpus, which may be a key to its success.
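To make the difference concrete, here is a minimal NumPy sketch of causal multi-query attention: each query head has its own projection, but all heads share a single key and value projection, which shrinks the key/value cache during generation. The dimensions and random weights are illustrative assumptions, not Falcon's actual configuration.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    x = x - x.max(axis=axis, keepdims=True)  # numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def multi_query_attention(x, w_q, w_k, w_v, w_o, n_heads):
    """Causal self-attention with n_heads query heads and one shared K/V head.

    x: (seq, d_model); w_q: (d_model, n_heads * d_head);
    w_k, w_v: (d_model, d_head); w_o: (n_heads * d_head, d_model)
    """
    seq = x.shape[0]
    d_head = w_k.shape[1]
    q = (x @ w_q).reshape(seq, n_heads, d_head).transpose(1, 0, 2)  # (heads, seq, d_head)
    k = x @ w_k  # (seq, d_head), shared by all query heads
    v = x @ w_v  # (seq, d_head), shared by all query heads
    scores = q @ k.T / np.sqrt(d_head)  # (heads, seq, seq)
    future = np.triu(np.ones((seq, seq), dtype=bool), k=1)  # positions after each token
    scores = np.where(future, -np.inf, scores)
    out = softmax(scores) @ v  # (heads, seq, d_head)
    out = out.transpose(1, 0, 2).reshape(seq, n_heads * d_head)
    return out @ w_o


rng = np.random.default_rng(0)
d_model, n_heads, d_head, seq = 16, 4, 4, 5
x = rng.normal(size=(seq, d_model))
w_q = rng.normal(size=(d_model, n_heads * d_head))
w_k = rng.normal(size=(d_model, d_head))
w_v = rng.normal(size=(d_model, d_head))
w_o = rng.normal(size=(n_heads * d_head, d_model))
y = multi_query_attention(x, w_q, w_k, w_v, w_o, n_heads)
```

Compared with standard multi-head attention, the key and value projections here are d_model x d_head instead of d_model x (n_heads * d_head), so only one key and one value vector per token have to be cached.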

Parameter-efficient LLM fine-tuning with LoRA

If we are considering ways to improve LLMs, one valuable resource is the OpenAI article on PALMS (Process for Adapting Language Models to Society). It discusses fine-tuning, which retrains the model using the same techniques as the original training but with a lower learning rate (~0.1). This allows us to train the model on our specific data and improve its responses in the desired domain.

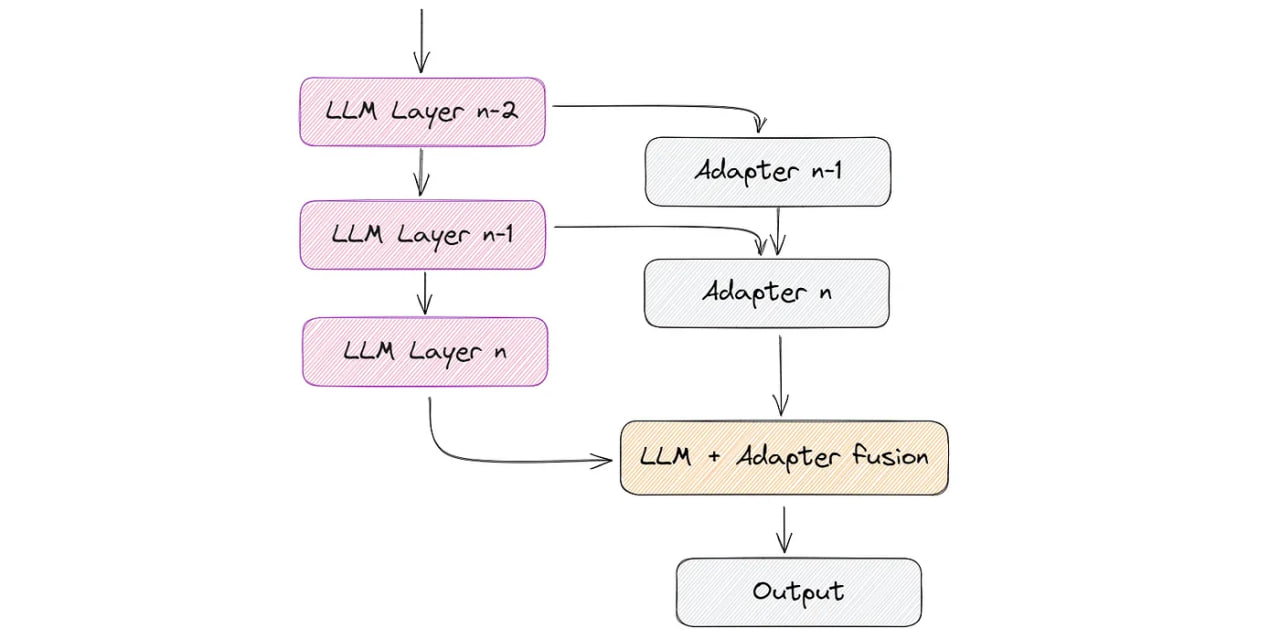

Apart from full fine-tuning, there are other approaches, such as adapters. Adapters add small extra layers to the existing layers of the original model, and only these newly added layers are trained while the original weights stay frozen. This makes training faster, because the number of trained weights is relatively small.
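A minimal NumPy sketch of a bottleneck adapter in the style of Houlsby et al. (2019): a down-projection, a nonlinearity, and an up-projection added back to the frozen layer's output through a residual connection. The dimensions and the ReLU are illustrative assumptions. Initializing the up-projection to zeros makes the adapter start as an identity function, so training begins from the original model's behavior.

```python
import numpy as np


class BottleneckAdapter:
    """Small trainable module applied to the output of a frozen layer."""

    def __init__(self, d_model: int, bottleneck: int, seed: int = 0):
        rng = np.random.default_rng(seed)
        self.w_down = rng.normal(scale=0.02, size=(d_model, bottleneck))
        self.w_up = np.zeros((bottleneck, d_model))  # zero init: identity at start

    def __call__(self, h: np.ndarray) -> np.ndarray:
        z = np.maximum(h @ self.w_down, 0.0)  # down-project + ReLU
        return h + z @ self.w_up              # up-project + residual connection

    def num_params(self) -> int:
        return self.w_down.size + self.w_up.size


d_model, bottleneck = 1024, 16
adapter = BottleneckAdapter(d_model, bottleneck)
h = np.ones((2, d_model))  # stand-in for a frozen layer's output
print(adapter.num_params(), d_model * d_model)  # prints: 32768 1048576
```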

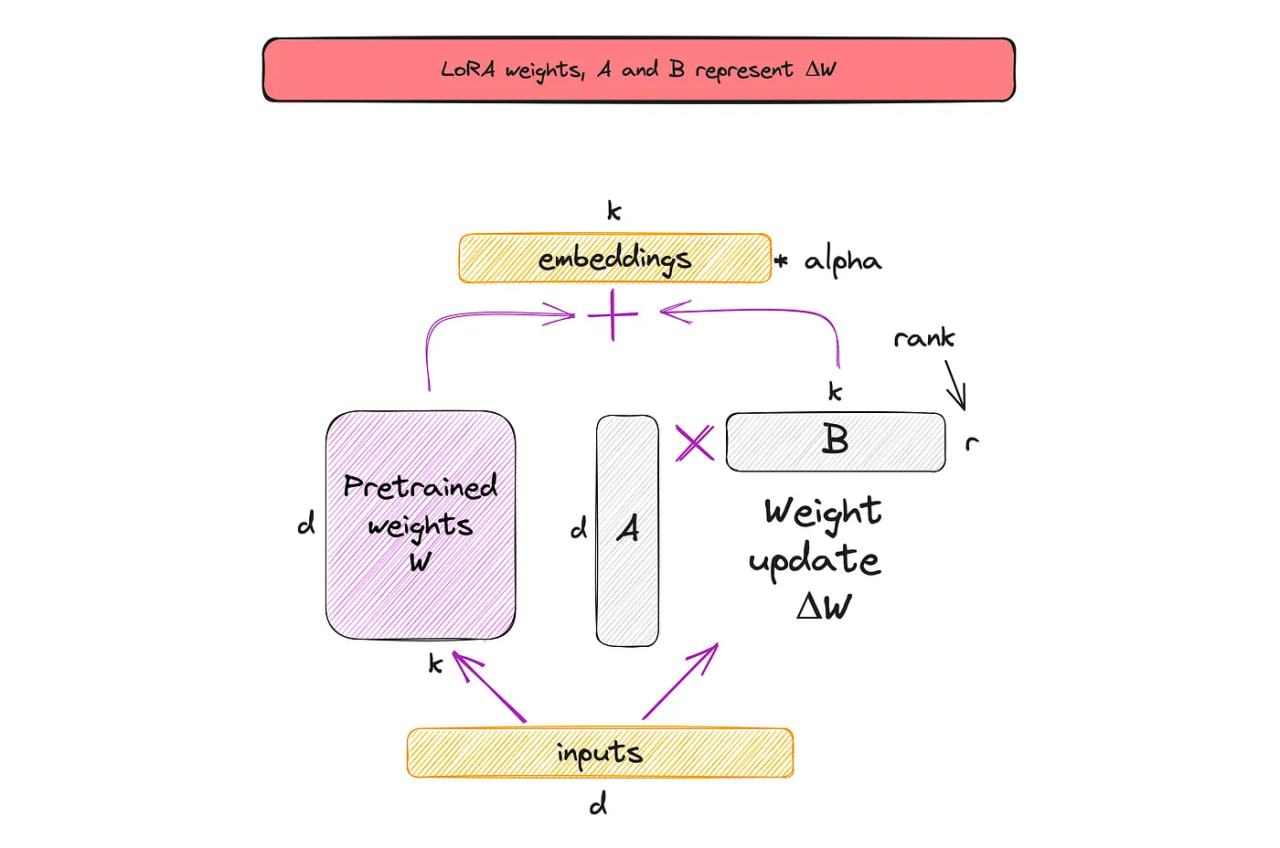

The concept of LoRA draws inspiration from observations about how weight matrices change during training, highlighted in the work of Aghajanyan et al. (2020). These observations suggest that such changes can be effectively approximated in a much lower-dimensional space while preserving most of their essential information and structure.
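The intuition can be checked numerically with a truncated SVD: a matrix that is close to low-rank can be rebuilt almost exactly from far fewer numbers. In this NumPy sketch the matrix is synthetic (a rank-8 product plus a little noise); real weight updates are not guaranteed to be this clean.

```python
import numpy as np


def low_rank_approx(m: np.ndarray, r: int) -> np.ndarray:
    """Best rank-r approximation of m (in the Frobenius norm) via truncated SVD."""
    u, s, vt = np.linalg.svd(m, full_matrices=False)
    return (u[:, :r] * s[:r]) @ vt[:r, :]


rng = np.random.default_rng(0)
d, true_rank = 256, 8
m = rng.normal(size=(d, true_rank)) @ rng.normal(size=(true_rank, d))
m += 1e-3 * rng.normal(size=(d, d))  # small full-rank noise

approx = low_rank_approx(m, r=true_rank)
rel_error = np.linalg.norm(m - approx) / np.linalg.norm(m)
# Small error, even though the factors store 2 * d * r numbers instead of d * d
print(f"relative error: {rel_error:.2e}")
```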

During training, each weight matrix W is replaced by W + A * B, where A and B are two small matrices whose inner dimension r is much smaller than the dimensions of W. The original matrix W is frozen, and only A and B are trained. The weight update is therefore ΔW = A * B, and the adapted weights are W + ΔW. Because A and B are small, training becomes faster and requires fewer resources. In a nutshell, this is the LoRA method, which is illustrated in the figure below.
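A minimal NumPy sketch of a LoRA-adapted linear layer, with training and gradients left out. It follows the common convention of initializing one factor randomly and the other with zeros, so ΔW = A * B is zero at the start, and it scales the update by alpha / r as in the LoRA paper. The class name, shapes, and initialization scale are illustrative assumptions.

```python
import numpy as np


class LoRALinear:
    """Frozen weight W plus a trainable low-rank update ΔW = A @ B."""

    def __init__(self, w: np.ndarray, r: int = 16, alpha: float = 32.0, seed: int = 0):
        d_in, d_out = w.shape
        rng = np.random.default_rng(seed)
        self.w = w                                       # frozen pretrained weight
        self.a = rng.normal(scale=0.01, size=(d_in, r))  # trainable, random init
        self.b = np.zeros((r, d_out))                    # trainable, zero init: ΔW = 0
        self.scaling = alpha / r

    def __call__(self, x: np.ndarray) -> np.ndarray:
        # x @ (W + scaling * A @ B) without materializing the full d_in x d_out update
        return x @ self.w + self.scaling * (x @ self.a) @ self.b

    def merged_weight(self) -> np.ndarray:
        """Fold the update into W for inference: W + scaling * A @ B."""
        return self.w + self.scaling * self.a @ self.b


rng = np.random.default_rng(1)
w = rng.normal(size=(64, 64))
layer = LoRALinear(w, r=4, alpha=8)
x = rng.normal(size=(3, 64))
assert np.allclose(layer(x), x @ w)  # an untrained adapter leaves outputs unchanged
```

Merging the update into W after training means inference runs at the same cost as the original model.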

Note that r in the figure above is a hyperparameter that specifies the rank of the low-rank matrices used for adaptation. A smaller r means fewer parameters to learn, but also less capacity to adapt. Choosing r is therefore a trade-off: too small a rank risks underfitting the new task, while a larger rank adds parameters and can make overfitting more likely.
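A quick worked example of how r controls the number of trainable parameters. For a d × k weight matrix, full fine-tuning updates d·k values, while a rank-r LoRA update trains r·(d + k). The 4096 × 4096 size below is illustrative rather than a specific Falcon layer; for r = 16 the count is 16 · 8,192 = 131,072, about 0.78% of the 16,777,216 entries in the full matrix.

```python
def lora_params(d: int, k: int, r: int) -> int:
    """Trainable parameters of a rank-r LoRA update for a d x k matrix."""
    return r * (d + k)


d = k = 4096
full = d * k
for r in (4, 8, 16, 32):
    n = lora_params(d, k, r)
    print(f"r={r:>2}: {n:>9,} trainable params ({n / full:.2%} of {full:,})")
```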

For further details and in-depth information, I recommend the following resources:

• Scaling Down to Scale Up: A Guide to Parameter-Efficient Fine-Tuning

• Parameter-Efficient LLM Finetuning With Low-Rank Adaptation (LoRA)

Experiment

To conduct my experiments, I used the Lit-GPT library, which provides implementations of open-source LLMs and is powered by Lightning Fabric. For hardware, I used a single A100 GPU with 40 GB of memory.

Downloading the model weights

The first step is to download the model weights and convert them to the Lit-GPT format. This is quite easy to do:

```bash
# download the model weights:
python scripts/download.py --repo_id tiiuae/falcon-7b

# convert the weights into a standardized form:
python scripts/convert_hf_checkpoint.py --checkpoint_dir checkpoints/tiiuae/falcon-7b
```

You can find the instructions to download other supported weights like RedPajama in this howto section.

Prepare the dataset

Fine-tuning involves two main steps: first, we process the dataset into the Lit-Parrot format (Lit-Parrot is the former name of Lit-GPT), and then we run the fine-tuning script on the processed dataset.

I modified the existing Alpaca script, whose prepare function loads the raw instruction dataset, creates prompts, and tokenizes them. In my case, I needed to change the prompt-generation function:

```python
def generate_prompt(example: dict[str, str]) -> str:
    """Generates a standardized message to prompt the model"""
    return (
        "You (I) are chatting with a user R. Write a reply to his message.\n\n"
        f"### Your previous communication:\n{example['context']}\n\n"
        f"### His new message:\n{example['instruction']}\n\n"
        f"### Your response:{example['response']}"
    )
```
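Conceptually, the tokenization part of such a prepare function can be sketched as follows, with the prompt and the response passed separately: both are encoded, and the prompt positions in the labels are replaced with an ignore index so the loss is computed only on the response tokens. The toy whitespace tokenizer, the ignore index of -1, and the function names are hypothetical, chosen for illustration rather than taken from Lit-GPT's code.

```python
IGNORE_INDEX = -1  # assumed label value that the loss function skips


def toy_encode(text: str, vocab: dict[str, int]) -> list[int]:
    """Hypothetical whitespace tokenizer that assigns new ids on the fly."""
    return [vocab.setdefault(token, len(vocab)) for token in text.split()]


def prepare_example(prompt: str, response: str, vocab: dict[str, int]) -> dict:
    """Tokenize prompt + response and mask the prompt part of the labels."""
    prompt_ids = toy_encode(prompt, vocab)
    input_ids = prompt_ids + toy_encode(response, vocab)
    # Prompt positions get IGNORE_INDEX, so the loss only covers the response
    labels = [IGNORE_INDEX] * len(prompt_ids) + input_ids[len(prompt_ids):]
    return {"input_ids": input_ids, "labels": labels}
```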

After the changes, you can start the data preparation process:

```bash
python scripts/prepare_dataset_my.py \
  --checkpoint_dir checkpoints/tiiuae/falcon-7b/
```

Preparing the prompts does not take long. In my case, it took only 2 minutes for 51k instructions.

Fine-tuning the Falcon model

Once you have prepared your dataset, fine-tuning the model is pretty straightforward.

I changed some parameters in the fine-tuning script for better results. Here is an overview of the hyperparameter settings I used:

• bfloat16 precision (I wrote more about bfloat16 in the article 7 ways to speed up inference of your hosted LLMs).

• The script was configured to train the model for 51k iterations using an effective batch size of 128 with gradient accumulation (more details on gradient accumulation in the article Finetuning LLMs on a Single GPU Using Gradient Accumulation).

• For LoRA, I used a rank of 16 to get a higher-quality adapter, and set alpha to 32. Alpha is a scaling factor: the LoRA update is multiplied by alpha / r (here 32 / 16 = 2), which balances the pretrained model's knowledge against the new task-specific adaptation.
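Gradient accumulation reaches the effective batch size of 128 by summing gradients over several smaller micro-batches before each optimizer step; with an assumed micro-batch size of 4, that is 128 / 4 = 32 micro-batches per update. The NumPy sketch below uses a toy linear-regression loss (an assumption chosen for brevity) to check that the accumulated gradient matches the full-batch gradient.

```python
import numpy as np

BATCH_SIZE = 128        # effective batch size from the settings above
MICRO_BATCH_SIZE = 4    # assumed per-step size that fits in GPU memory
ACCUM_STEPS = BATCH_SIZE // MICRO_BATCH_SIZE  # 32


def mse_grad(w: np.ndarray, x: np.ndarray, y: np.ndarray) -> np.ndarray:
    """Gradient of the mean squared error of a linear model x @ w."""
    return 2 * x.T @ (x @ w - y) / len(x)


rng = np.random.default_rng(0)
x = rng.normal(size=(BATCH_SIZE, 8))
y = rng.normal(size=BATCH_SIZE)
w = np.zeros(8)

accumulated = np.zeros_like(w)
for step in range(ACCUM_STEPS):
    sl = slice(step * MICRO_BATCH_SIZE, (step + 1) * MICRO_BATCH_SIZE)
    # Divide by ACCUM_STEPS so the sum equals the average over the full batch
    accumulated += mse_grad(w, x[sl], y[sl]) / ACCUM_STEPS

assert np.allclose(accumulated, mse_grad(w, x, y))
w -= 0.01 * accumulated  # one optimizer step per effective batch
```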

Then run the LoRA fine-tuning script (finetune/lora.py; lora_my.py below is my modified version of it), providing your data path:

```bash
python finetune/lora_my.py \
  --checkpoint_dir checkpoints/tiiuae/falcon-7b/ \
  --data_dir data/falcon/ \
  --out_dir out/falcon \
  --precision bf16-true
```

Monitor the fine-tuning

You can use the Linux watch command to repeatedly run nvidia-smi every half second:

```bash
watch -n 0.5 nvidia-smi
```

You can find the model checkpoints in the out/falcon folder and use the generation script to play around with the model.

Fine-tuning the model took approximately 10 hours and about 30 GB of GPU memory on a single A100. It is also worth noting that the adapter itself is lightweight: only 40 MB, compared to 16 GB for the Falcon model.

Running inference with the fine-tuned model

You can use the fine-tuned checkpoint of your LLM to generate text. Lit-GPT provides generation scripts that support int8 and int4 quantization for devices with less GPU memory; you can also change the precision and use multiple GPUs:

```bash
python generate/lora.py \
  --checkpoint_dir checkpoints/tiiuae/falcon-7b \
  --lora_path out/falcon/lit_model_lora_finetuned.pth \
  --prompt "What happened to you? Tell me" \
  --max_new_tokens 300 \
  --precision bf16-true
```

In my case, I ran the model on a single GPU, without quantization and in bfloat16 precision. I also split the original lora script into two parts:

- A web interface built with streamlit and streamlit-chat for quicker testing of the model. You can find my version here.

- A REST API built with the FastAPI web framework for model inference. This allowed me to load the model into memory once and reuse it for every request.

Demo (I translated the text to make it clear):

It is important to note that this is one of the best examples that I got. The others were noticeably worse.

Generation speed, even without quantization, was remarkably fast at 45.51 tokens per second. If you want to accelerate text generation or reduce memory usage, I recommend checking out my previous article 7 ways to speed up inference of your hosted LLMs.

Quality Comparison

While a detailed performance benchmark on real-world tasks is out of scope for this article, I can share my personal observations from using the fine-tuned model.

During testing, I observed peculiar behavior, such as the generation of unrelated text, occasional disregard for context, and difficulty maintaining a coherent dialogue.

I suspect this can be improved in several ways:

• Enhance data cleaning processes to ensure higher data quality.

• Incorporate additional annotated dialogue datasets.

• Increase LoRA rank from 16 to 32.

• Utilize a model with a larger size, such as Falcon-40B.

• Reduce the length of the context or simplify it.

• Simplify prompts to provide clearer instructions.

Limitations

While Lit-GPT offers a wide range of functionality, I would suggest using it primarily for testing hypotheses. In my view, it is not yet fully ready for production use. For instance, at the time of writing, Lit-GPT lacks a built-in way to convert the model back to the Hugging Face format. However, it is still possible, and the library authors propose a couple of solutions:

- Write a reverse conversion for each of the Hugging Face classes.

- Create a Hugging Face Transformers version of lit_gpt.model.

Note that the first method does not support LoRA and Adapter modifications.

Keep these limitations in mind when developing your solution. If you are fine-tuning LLMs for production, I recommend doing so using pure PyTorch. You can refer to this article by Amazon for more information.

Conclusion

The ability to fine-tune an LLM on a single GPU in a matter of hours is truly impressive. You can build many small LoRA modules for different tasks. When these fine-tuned models are deployed for real-time inference, you only need to load the shared base model once. Given that large LLMs can take up 100+ GB, this advantage cannot be ignored.
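The shared-base idea can be sketched in NumPy: the large frozen matrix is stored once, and each task contributes only its own small (A, B) pair, selected per request. The task names, sizes, and scaling factor below are hypothetical, and a real model would apply this in every adapted layer.

```python
import numpy as np

rng = np.random.default_rng(0)
d, r, scaling = 512, 8, 2.0
w_base = rng.normal(size=(d, d))  # frozen base weight, loaded once

# One small (A, B) adapter per task; the task names are hypothetical
adapters = {
    task: (rng.normal(scale=0.01, size=(d, r)), rng.normal(scale=0.01, size=(r, d)))
    for task in ("chat", "summarize", "translate")
}


def forward(x: np.ndarray, task: str) -> np.ndarray:
    """Apply the shared base weight plus the selected task's low-rank update."""
    a, b = adapters[task]
    return x @ w_base + scaling * (x @ a) @ b


x = rng.normal(size=(1, d))
outputs = {task: forward(x, task) for task in adapters}

base_size = w_base.size
adapter_size = sum(a.size + b.size for a, b in adapters.values())
print(f"base: {base_size:,} params, all adapters: {adapter_size:,} params")
```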

However, it is essential to approach the process with realistic expectations. It is likely that experimentation with various hyperparameters will be necessary to achieve optimal results. Additionally, dataset annotation and cleaning are crucial steps to ensure the best outcomes. Also pay attention to what data the LLM was trained on, and check benchmarks for tasks similar to yours.

By following these steps diligently, I am confident that excellent results can be achieved!